Saturday 3 July 2010

ASIWebPageRequest - a new class for downloading complete webpages

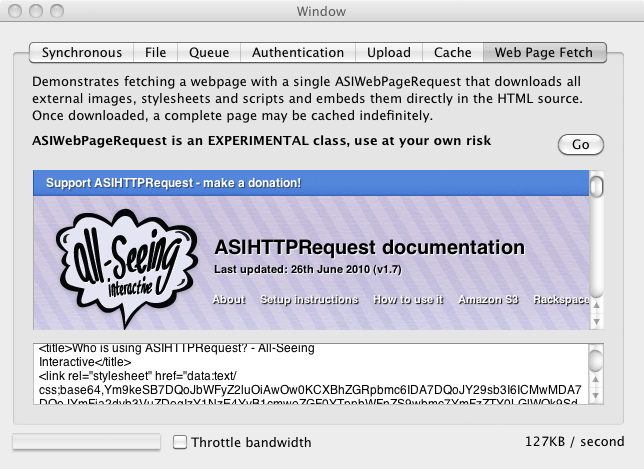

ASIWebPageRequest is a new experimental addition to the ASIHTTPRequest family. It can be used to download a complete webpage, including external resources like images and stylesheets, in a single request.

Once a web page is downloaded, the request will parse the content, look for external resources, download them, and insert them directly into the html source using Data URIS. You can then take the response and put it directly into a UIWebView / WebView on Mac.

Why do this?

There are a number of advantages:

- You have access to the whole page (including external resources) as a single string. This means you can cache the whole page indefinitely, which may be especially useful on iOS, where the WebKit browsing engine might not cache resources as much as you’d like. If a webpage is already cached, you can easily display it even if there is no internet connection available

- You can more easily connect to pages that require authentication not just for the page itself, but for external resources too. This can be tricky to get right otherwise, especially if you need to use an authentication scheme like NTLM on iOS.

- In future, you might be able to track the load progress for a whole page, or replace particular elements in the page easily with custom content (ASIWebPageRequest does not currently support either of these, but it could do in future)

How it works

ASIWebPageRequest works by downloading its URL, and looking at the content type.

HTML documents

If it gets a content type that looks like HTML / XHTML, it will parse the page as HTML. To do this, it first uses TidyLib to turn the page into an XML document, then runs an XPath query on the content using (Libxml to find external resource URLs. It creates its own internal queue, and adds a request for each external resource it finds. Once all requests have finished downloading, it will replace the content of the URL attributes in the original HTML document with a Base64-format data URI.

CSS documents

ASIHTTPRequest handles CSS documents in a similar way, but replaces URLs with data URLs using a simple find and replace.

Currently, ASIWebPageRequest can download images, stylesheets and scripts. Stylesheets are also parsed to find external images, and these are downloaded too. It could be extended relatively easily to support more tags (eg frames / iframes) - just modify the XPath query.

Limitations

- Currently, only certain external resources are downloaded. A modern web page may contain many more that it doesn’t find (plugins etc). Additionally, many scripts fetch more data when they run. This means that there’s no guarantee all required external resources will be cached. However, on a typical page, ASIWebPageRequest should at least cut down on the loading times if most images are pulled from a previously cached version.

- The output HTML is transformed, and may well be quite different from the original. In particular, outputted HTML is XHTML with a strict doc-type - this may well break pages relying on quirks mode layout.

- TidyLib doesn’t seem to know about HTML5 yet. I’ve added some of the tags (eg header, nav etc) to allow it to preserve them, but there are probably lots more. Obviously, the resulting page will not conform to the XHTML standard, though I doubt this is likely to be a problem in most cases.

- CSS parsing is a simple find and replace affair, so certain things may cause it to break

- ASIWebPageRequest is currently rather memory hungry for complex pages, because it keeps all page data in memory at once

- ASIWebPageRequests cannot currently be run as synchronous requests

Try it out

If you’re interested in trying out ASIWebPageRequest, you can grab a copy of ASIHTTPRequest that includes it from this branch in GitHub (Direct download link). To get it working, you’ll need to link against libtidy and libxml2. I found I needed to add the libxml2 path to my header search paths to get it to find the libxml headers.

There is a working example in the Mac sample application. To get a feel for what is possible, try saving out the response HTML into a file on your desktop, then turn off your internet access, and load it into your browser.

Posted by Ben @ 12:54 PM